AI Applications in Business: How AI is Transforming Companies

Business intelligence (BI) solutions are critical to an organization’s success. Data must be gathered and analyzed in a meaningful way to inform decisions about the future. But collecting data is only half the battle: it also needs to be transformed into a form that can be used for analysis. This is where ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) come in.

In this blog, we’ll look at ETL and ELT in more detail: how they differ and when to use each. As a certified Microsoft Solutions Partner for Data & AI (Azure), ProServeIT has the expertise to help you manage your data across multiple systems and build analytics and Artificial Intelligence (AI) solutions.

In this blog, you will find:

Why Data Processing is Vital for Business Intelligence

Primary Methods of Processing Data - ETL & ELT

Exploring ETL and ELT: A Comparative Analysis of Data-Processing Methods

What’s Next? Data Customer Journey

Why Data Processing is Vital for Business Intelligence

Data is at the core of modern businesses, fueling decision-making and driving operational efficiency. To harness its full potential, organizations must process data efficiently and accurately. Data processing for business intelligence (BI) transforms raw data into actionable insights that can provide a competitive edge in the market. Here's why data processing is crucial for BI.

📑 Clean and standardize the data

Data from different sources often comes in varying formats and may contain errors, duplicates, or inaccurate values. Data transformation cleans and standardizes the data, eliminating inconsistencies so that it is accurate, reliable, and relevant for analysis.
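As an illustration, here is a minimal Python sketch of cleaning and standardizing records. The sample records, the country-code mapping, and the two accepted date formats are hypothetical assumptions; in practice these rules usually live in your ETL/ELT tool rather than in hand-written code.

```python
from datetime import datetime

# Hypothetical raw customer records: mixed date formats, inconsistent
# casing and whitespace, a duplicate, and a record missing its key.
raw_records = [
    {"email": " Alice@Example.com ", "signup": "2023-06-01", "country": "ca"},
    {"email": "alice@example.com", "signup": "06/01/2023", "country": "CA"},
    {"email": "bob@example.com", "signup": "2023-05-15", "country": "Canada"},
    {"email": "", "signup": "2023-05-20", "country": "US"},
]

# Assumed mapping of country spellings to ISO codes (illustrative only).
COUNTRY_CODES = {"ca": "CA", "canada": "CA", "us": "US", "united states": "US"}
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def parse_date(value):
    """Try each known format and return the date as an ISO string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def clean(records):
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()  # standardize the business key
        if not email or email in seen:        # drop keyless rows and duplicates
            continue
        seen.add(email)
        cleaned.append({
            "email": email,
            "signup": parse_date(rec["signup"]),
            "country": COUNTRY_CODES.get(rec["country"].strip().lower(), "UNKNOWN"),
        })
    return cleaned


clean_records = clean(raw_records)
```

Deduplication runs after normalization on purpose: " Alice@Example.com " and "alice@example.com" only match once both are trimmed and lower-cased.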

🎛️ Integrate data from multiple sources

Building a data warehouse involves consolidating data from multiple sources into a unified view. Data transformation is needed to integrate these diverse sources consistently, mapping differing field names, formats, and identifiers onto a common structure. Once the data is aligned, businesses can derive meaningful insights and analyze their operations comprehensively.
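The sketch below shows the idea in Python under stated assumptions: two hypothetical extracts (a CRM-style source and an ERP-style source) describe the same customers with different field names and ID formats, and are mapped onto one assumed common schema keyed by an integer customer ID.

```python
# Hypothetical extracts from two systems describing the same customers
# with different field names and ID formats.
crm_rows = [
    {"AccountId": "C-001", "AccountName": "Contoso Ltd", "Region": "East"},
    {"AccountId": "C-002", "AccountName": "Fabrikam Inc", "Region": "West"},
]
erp_rows = [
    {"cust_no": 1, "open_balance": 1200.50},
    {"cust_no": 2, "open_balance": 0.0},
    {"cust_no": 3, "open_balance": 75.25},
]


def crm_key(account_id):
    """Convert 'C-001'-style IDs to the integer key the ERP source uses."""
    return int(account_id.split("-")[1])


def integrate(crm, erp):
    unified = {}
    for row in crm:
        key = crm_key(row["AccountId"])
        unified[key] = {"customer_id": key, "name": row["AccountName"],
                        "region": row["Region"], "balance": None}
    for row in erp:
        # ERP-only customers still appear, with unknown name and region.
        entry = unified.setdefault(row["cust_no"], {
            "customer_id": row["cust_no"], "name": None,
            "region": None, "balance": None})
        entry["balance"] = row["open_balance"]
    # Return one row per customer in the unified schema, ordered by key.
    return [unified[k] for k in sorted(unified)]


customers = integrate(crm_rows, erp_rows)
```

Keeping customers that exist in only one source (rather than an inner join) makes gaps visible for follow-up instead of silently dropping them.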

💻 Establish a Business-Driven Data Model

A successful data warehouse requires a well-defined data model that aligns with the organization's business requirements. Data transformation plays a vital role in creating and shaping this model, including aggregating data, deriving calculated fields, and eliminating unnecessary information. This step ensures that the data model supports efficient analysis and reporting.
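To illustrate those three operations, here is a minimal Python sketch. The order-line fields, the discount-based revenue formula, and the monthly/product grain are assumptions chosen for the example, not a prescribed model.

```python
from collections import defaultdict

# Hypothetical order lines as extracted; "internal_note" is not needed for analysis.
order_lines = [
    {"order_date": "2023-05-03", "product": "Widget", "qty": 3,
     "unit_price": 10.0, "discount": 0.0, "internal_note": "rush"},
    {"order_date": "2023-05-17", "product": "Widget", "qty": 2,
     "unit_price": 10.0, "discount": 0.1, "internal_note": ""},
    {"order_date": "2023-06-02", "product": "Gadget", "qty": 1,
     "unit_price": 25.0, "discount": 0.0, "internal_note": "gift"},
]


def to_fact(line):
    """Keep only modelled columns and derive a calculated revenue field."""
    return {
        "month": line["order_date"][:7],  # e.g. "2023-05"
        "product": line["product"],
        "qty": line["qty"],
        "revenue": round(line["qty"] * line["unit_price"] * (1 - line["discount"]), 2),
    }


def monthly_sales(lines):
    """Aggregate facts to one row per (month, product)."""
    totals = defaultdict(lambda: {"qty": 0, "revenue": 0.0})
    for fact in map(to_fact, lines):
        bucket = totals[(fact["month"], fact["product"])]
        bucket["qty"] += fact["qty"]
        bucket["revenue"] = round(bucket["revenue"] + fact["revenue"], 2)
    return dict(totals)


summary = monthly_sales(order_lines)
```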

😎 Enhance Performance

Data warehouse systems are designed to handle vast amounts of data and deliver fast, efficient analysis. Data transformation contributes to performance by reducing data size, creating indexes, and aggregating data. These techniques improve system responsiveness, enabling timely decision-making and smooth analytical processes.
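Two of these techniques, indexing and pre-aggregation, can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. The table and index names are hypothetical, and a production warehouse (for example, Azure Synapse) has its own indexing and materialization options.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("East", 100.0), ("West", 250.0), ("East", 50.0), ("North", 75.0)],
)

# 1. An index lets filters on region avoid a full table scan.
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")

# 2. A pre-aggregated summary table means reports read a few rows
#    instead of re-scanning every individual sale.
conn.execute("""
    CREATE TABLE sales_by_region AS
    SELECT region, COUNT(*) AS n_sales, SUM(amount) AS total_amount
    FROM sales
    GROUP BY region
""")

# Ask SQLite how it would run a filtered query; the last column of each
# plan row is a human-readable description of the step.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT amount FROM sales WHERE region = ?", ("East",)
).fetchall()
plan_details = " ".join(row[-1] for row in plan)

east_total = conn.execute(
    "SELECT total_amount FROM sales_by_region WHERE region = 'East'"
).fetchone()[0]
```

The summary table trades freshness for speed: it must be rebuilt (or incrementally updated) whenever new sales are loaded.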

📝 Prepare the Data for Analysis

Data transformation prepares data for analysis and business intelligence activities. This step involves creating summary data, calculating new values, and converting data into a format suited to analysis. With these preparations in place, businesses can effectively leverage their data to uncover valuable insights and make informed decisions.

Primary Methods of Processing Data

What is ETL (Extract-Transform-Load), and why is it used?

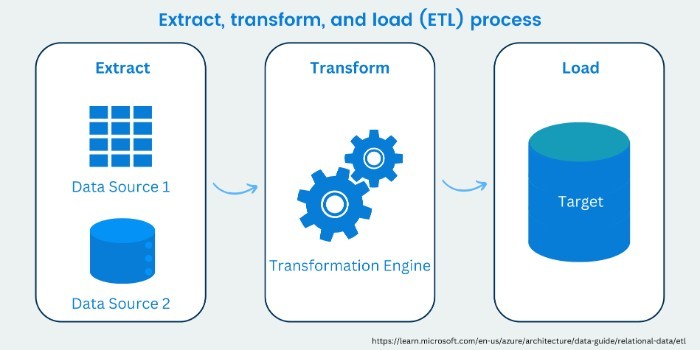

ETL (Extract, Transform, Load) is widely used to integrate data from multiple sources into a target database. The process extracts data from various sources, transforms it to conform to the target schema, and then loads it into the destination database. Developed in the 1970s, ETL remains a prevalent technique, particularly for on-premises database systems with limited processing capacity and memory.

ETL is commonly used for cloud data integration and high-volume data processing:

• ETL is a leading method for cloud data integration, such as bringing in data from Salesforce and Dynamics 365. It enables businesses to move data between business systems quickly and cost-effectively.

• ETL can process high-volume data efficiently. It is used primarily in traditional data warehouses to extract, transform, and load large datasets.
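The ETL order of operations can be sketched in a few lines of Python, with an in-memory SQLite database standing in for the warehouse. The CSV extract, the column names, and the fixed exchange rates are hypothetical; the point is only that cleansing and conversion happen *before* anything reaches the target.

```python
import csv
import io
import sqlite3

# Hypothetical source extract (in practice: an API export, file drop, or source query).
SOURCE_CSV = """order_id,customer,amount,currency
1001,Contoso,120.00,USD
1002,Fabrikam,80.00,CAD
1003,Contoso,,USD
"""

# Assumed fixed exchange rates, for illustration only.
TO_USD = {"USD": 1.0, "CAD": 0.75}


def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform outside the warehouse: drop incomplete rows, cast types, convert currency."""
    out = []
    for row in rows:
        if not row["amount"]:
            continue
        out.append((int(row["order_id"]), row["customer"],
                    round(float(row["amount"]) * TO_USD[row["currency"]], 2)))
    return out


def load(rows, conn):
    """Load: write only the already-transformed rows to the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS fact_orders "
                 "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_usd REAL)")
    conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), warehouse)
```

Note that the incomplete row (order 1003) never reaches the warehouse; if the business later wants to keep such rows, the pipeline has to be changed and the source re-extracted.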

What is ELT (Extract-Load-Transform), and why is it used?

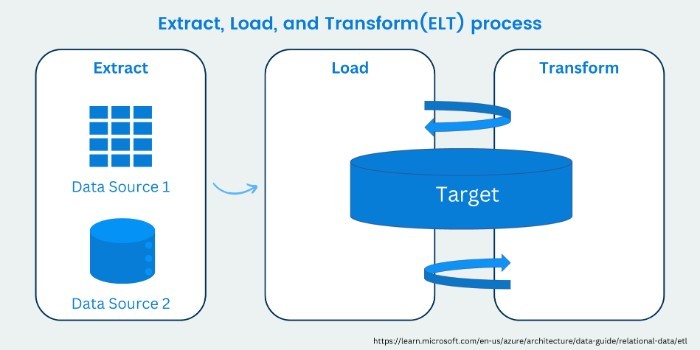

ELT (Extract, Load, Transform) is a data integration approach that emerged in the early 2000s in response to the rise of powerful in-database processing in data warehousing systems. Rather than reshaping data in transit, ELT moves it from sources to the target largely unchanged. It follows three stages: extracting data from the sources, loading it into the target system, and transforming it there to meet data warehouse requirements. Processes such as normalization and data type conversions are applied inside the target to ensure data compatibility. ELT provides a streamlined approach to data integration, offering flexibility and scalability for efficient data processing.

ELT is primarily used for data integration and data pipelines:

• Data integration involves capturing, mapping, transforming, and loading data from multiple sources into the target system. With ELT, businesses can move large amounts of data between systems quickly and reliably.

• ELT plays a crucial role in data pipelines, where data is extracted from multiple sources, loaded into the target system, and transformed within the target environment. It ensures seamless data flow and facilitates real-time or near-real-time data processing.
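Here is the same kind of order data handled ELT-style, again as a minimal Python sketch with SQLite standing in for the warehouse. The staging table, the `fx_rates` table, and the `fact_orders` view are hypothetical names. The raw rows are loaded untouched, and the transformation is written in SQL and executed inside the target.

```python
import csv
import io
import sqlite3

# Hypothetical source extract.
SOURCE_CSV = """order_id,customer,amount,currency
1001,Contoso,120.00,USD
1002,Fabrikam,80.00,CAD
1003,Contoso,,USD
"""

warehouse = sqlite3.connect(":memory:")

# Extract + Load: copy raw rows into a staging table unchanged (everything as TEXT).
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT, currency TEXT)")
rows = csv.DictReader(io.StringIO(SOURCE_CSV))
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount, :currency)", rows)

# Transform: done afterwards, inside the target system, with SQL.
# A view can be redefined later without re-extracting the source data.
warehouse.executescript("""
    CREATE TABLE fx_rates (currency TEXT PRIMARY KEY, to_usd REAL);
    INSERT INTO fx_rates VALUES ('USD', 1.0), ('CAD', 0.75);

    CREATE VIEW fact_orders AS
    SELECT CAST(r.order_id AS INTEGER) AS order_id,
           r.customer,
           ROUND(CAST(r.amount AS REAL) * f.to_usd, 2) AS amount_usd
    FROM raw_orders AS r
    JOIN fx_rates AS f ON f.currency = r.currency
    WHERE r.amount <> '';
""")
```

Because all three raw rows stay in `raw_orders`, changing the business rule (for example, keeping orders with no amount) only means redefining the view; the data does not have to be extracted again.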

Exploring ETL and ELT: A Comparative Analysis of Data-Processing Methods

The critical difference between ETL and ELT lies in the order of the steps and where the data transformation occurs. ETL transforms the data before loading it, while ELT loads the raw data first and performs the transformation within the target system, such as a data warehouse or data lake. ETL and ELT are two different approaches to data integration for data warehousing, each with its own characteristics and considerations.

🔗 Flexibility:

• ETL can be inflexible, as changes to the transformations often require significant time and effort.

• ELT is more flexible, as the transformations are easier to change when business requirements change.

⚖️ Scalability:

• ETL can be less scalable, as the transformations are performed outside the data warehouse.

• ELT is more scalable, as it can take advantage of the massively parallel processing (MPP) capabilities of modern data warehouses.

👍 Data Quality:

• ETL can help improve data quality by transforming data into a standard format before it is loaded.

• ELT may require additional data quality checks in the data warehouse.
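In an ELT setup, such checks are often plain SQL queries run against the loaded data. The following is a minimal Python/SQLite sketch; the `raw_orders` table, the sample rows, and the three check rules are hypothetical assumptions, and dedicated data-quality tooling would normally manage checks like these.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", [
    ("1001", "Contoso", "120.00"),
    ("1002", "Fabrikam", "80.00"),
    ("1002", "Fabrikam", "80.00"),  # duplicate load
    ("1003", "", "5.00"),           # missing customer
])

# Each check counts offending rows; a count of 0 means the check passes.
CHECKS = {
    "missing_customer":
        "SELECT COUNT(*) FROM raw_orders WHERE customer IS NULL OR TRIM(customer) = ''",
    "duplicate_order_id":
        """SELECT COUNT(*) FROM (
               SELECT order_id FROM raw_orders
               GROUP BY order_id HAVING COUNT(*) > 1)""",
    "negative_amount":
        "SELECT COUNT(*) FROM raw_orders WHERE CAST(amount AS REAL) < 0",
}


def run_checks(conn, checks):
    """Run every check inside the database and return {check_name: offending_count}."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in checks.items()}


results = run_checks(conn, CHECKS)
failed = sorted(name for name, count in results.items() if count > 0)
```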

🖇️ Complexity:

• ETL can be complex to design and implement, especially with heterogeneous data sources.

• ELT can be simpler to design and implement but may require more advanced data warehousing skills.

💰 Cost:

• ETL can be expensive to implement and maintain, often requiring specialized ETL tools and resources.

• ELT can be less expensive, as it often uses existing data warehousing tools and resources.

In data warehousing, ETL and ELT offer different trade-offs in data latency, flexibility, scalability, data quality, complexity, and cost. Organizations should carefully evaluate their needs, data characteristics, and analytics requirements to determine which approach best aligns with their objectives.

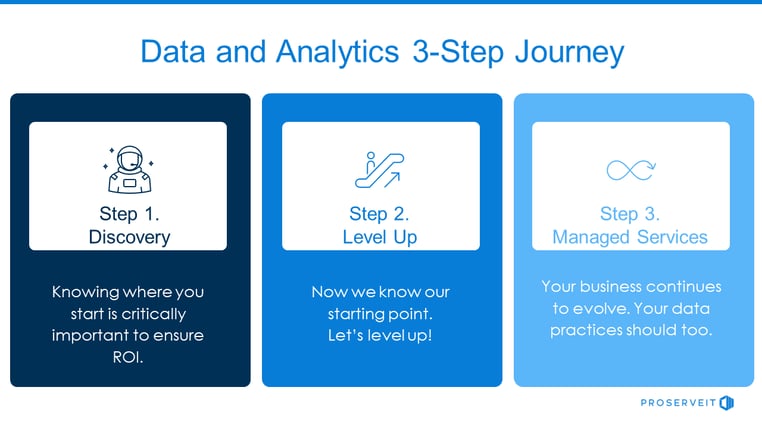

What’s Next? Data Customer Journey

At ProServeIT, we are committed to supporting you throughout your Data & Analytics Journey, from the initial discovery phase to reaching new levels of data maturity and providing ongoing managed services.

Step 1. Discovery

• Evaluate and assess your existing data practices.

• Determine your current data maturity level.

• Develop a comprehensive implementation plan to enhance and elevate your data capabilities.

• Gain insights into the costs and potential return on investment (ROI) associated with data processing.

Step 2. Level Up ☝️

• Integrate data from diverse sources into a unified platform.

• Establish a "single source of truth" to enable data-driven decision-making.

• Build a robust foundational data infrastructure to support your Business Intelligence (BI) initiatives.

Step 3. Managed Service ⚙️

• Continuously enhance your data practices and maximize ROI.

• Receive weekly strategic guidance from senior consultants.

• Empower key stakeholders by involving them in data management and decision-making.

Partner with ProServeIT today to unlock the full potential of your data, drive meaningful insights, and achieve success in your Data and Analytics initiatives.

Learn more and contact us today to start your Data & Analytics journey with ProServeIT!

Content from: ProServeIT Academy 2023 Microsoft Data Analytics Course 1 by Scott Sugar

Edited by: Hyun-Jin Im and Betty Quon

June 23, 2023