Understanding Microsoft Copilot Agents: A New Era in AI Productivity

Artificial Intelligence (AI) is not just a trend but the driving force behind the future of every industry, from healthcare to finance and retail. Just as a city's future depends on its infrastructure, the future of AI depends on a robust, purpose-built foundation. This foundation, known as AI infrastructure, is the key to shaping the future of businesses and unlocking the full potential of AI. In healthcare, AI infrastructure can power advanced diagnostic tools; in finance, it can enable predictive analytics for market trends; and in retail, it can drive personalized customer experiences.

Think of AI infrastructure as the nervous system of a business. Just as nerves carry signals from the brain to the body to enable movement, AI infrastructure handles the data, computations, and processing power that bring AI systems to life. For instance, it powers the algorithms that enable self-driving cars to navigate, virtual assistants to understand and respond to voice commands, and predictive analytics to forecast market trends. Without it, these AI applications would struggle to function.

The businesses that succeed in leveraging AI today are the ones investing in their AI infrastructure to stay competitive and drive innovation.

In this blog, we’ll take you through what AI infrastructure is, how it works, and why it’s becoming an essential investment for modern businesses looking to stay ahead.

In this blog, you will find:

AI Infrastructure vs. Traditional IT Infrastructure

Why Businesses Need AI Infrastructure

The Role of Cloud in AI Infrastructure

AI Infrastructure in Action: Real-World Applications

What Is AI Infrastructure?

At its core, AI infrastructure refers to the integrated hardware and software environment essential for supporting AI and machine learning workloads. It’s a combination of specialized hardware, software, and cloud solutions that work together to enable AI systems to process massive amounts of data, train models, and make intelligent decisions in real time.

According to IBM, AI infrastructure, also known as an AI stack, consists of the hardware and software necessary to create and deploy AI-powered applications like Copilot, ChatGPT, facial recognition, and predictive analytics. It’s the powerhouse that drives AI’s ability to transform industries.

In simple terms, think of AI infrastructure as the "engine room" for artificial intelligence: just as an engine powers a car, AI infrastructure powers AI systems. Without the right infrastructure, AI wouldn’t have the computational strength or speed to perform tasks like processing large data sets or generating real-time insights.

What Are the Key Components of AI Infrastructure?

AI infrastructure includes several key elements that work together to handle the complex tasks that AI systems require:

✅ Compute Resources: AI relies on specialized hardware like Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) to handle the massive computational needs of machine learning (ML) models. GPUs, for example, allow AI systems to perform thousands of calculations simultaneously, speeding up tasks like image recognition and natural language processing.

✅ Data Storage and Processing: AI applications need to process and analyze vast amounts of data. This requires scalable storage systems (either cloud-based or on-premises) and data processing frameworks that can quickly clean and organize data for model training.

✅ Machine Learning Frameworks: Platforms like TensorFlow and PyTorch provide the software environments that developers use to build, train, and deploy AI models. These frameworks enable efficient data handling and model training, making them essential for AI infrastructure.
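To make "training a model" concrete, here is a minimal sketch in plain Python of the loop that frameworks like TensorFlow and PyTorch automate and accelerate. It is an illustration only, not how those frameworks are implemented: the function name `train_linear_model`, the data points, and the hyperparameters are all assumptions chosen for this example.

```python
# Minimal sketch: fit y = w*x + b with gradient descent in plain Python.
# The data and hyperparameters below are made up for illustration.

def train_linear_model(xs, ys, learning_rate=0.05, epochs=1000):
    """Return (w, b) that approximately minimize mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Update step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training data generated from y = 3x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 4.0, 7.0, 10.0, 13.0]

w, b = train_linear_model(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
```

Real models repeat this same forward pass, gradient, and update cycle over millions of parameters and examples, which is why the compute and storage components above matter so much.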

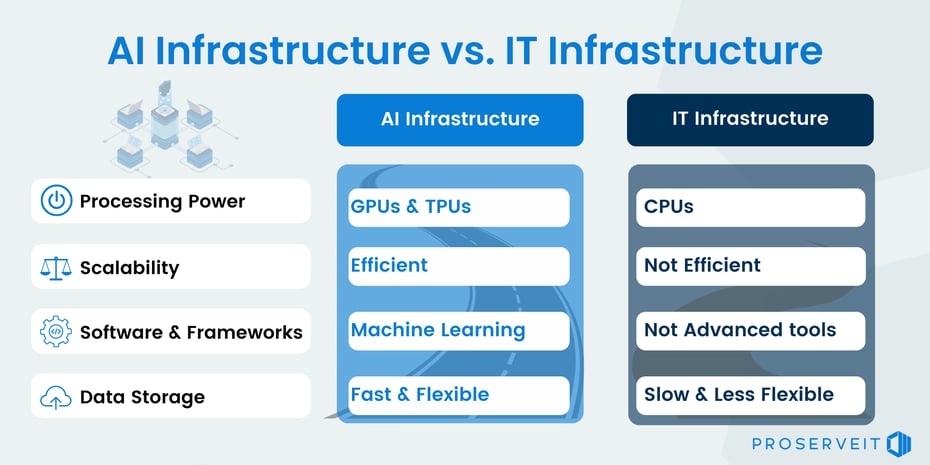

AI Infrastructure vs. Traditional IT Infrastructure

While AI infrastructure might sound similar to traditional IT infrastructure, they serve vastly different purposes. Traditional IT systems are designed to handle routine business tasks like email, data storage, and web hosting. In contrast, AI infrastructure is built to power advanced AI applications like machine learning, natural language processing, and real-time data analytics.

The importance of AI infrastructure lies in its role as a crucial enabler for successful AI and machine learning operations.

A Simple Comparison: Highways vs. Dirt Roads

Think of AI infrastructure like a high-speed, multi-lane highway designed for fast, uninterrupted traffic, while traditional IT infrastructure is more like a narrow dirt road—it works for smaller, everyday tasks but struggles when it comes to handling larger, more complex loads. Traditional IT systems rely on Central Processing Units (CPUs), which are like cars driving on this dirt road. They’re fine for basic computing tasks but lack the speed and efficiency to process the massive amounts of data required for AI.

AI infrastructure, on the other hand, leverages graphics processing units (GPUs) and tensor processing units (TPUs), which are high-speed vehicles on a highway and are built to handle heavy data traffic quickly and efficiently. These hardware accelerators are designed to process thousands of operations simultaneously, making them ideal for tasks like deep learning and real-time data analytics.

As AI models grow in complexity and scale, GPUs and TPUs become essential for ensuring scalability and performance. According to Hewlett Packard Enterprise (HPE), GPUs excel at parallel processing, allowing AI systems to train models faster and more accurately. TPUs, created specifically for AI tasks, handle the vast amounts of tensor calculations required for machine learning at even greater speeds.
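The idea behind parallel processing can be sketched in a few lines of Python. A matrix-vector product breaks down into row-by-row dot products that do not depend on each other, so they can be handed to separate workers. This is only an analogy for how accelerators divide work: Python threads on a CPU will not deliver GPU-style speedups, and the function names and sample data here are assumptions made for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Dot product of one matrix row with the vector."""
    return sum(a * b for a, b in zip(row, vector))


def matvec_sequential(matrix, vector):
    """One worker handles every row, one after another."""
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix, vector, max_workers=4):
    """Each row is an independent task that any worker can pick up."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves the order of the input rows.
        return list(pool.map(lambda row: dot(row, vector), matrix))


# Hypothetical sample data.
matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
vector = [1, 0, -1]

print(matvec_sequential(matrix, vector))
print(matvec_parallel(matrix, vector))
```

Both functions return the same result. The difference is that the parallel version never needs one row's answer to compute another's, which is the property that lets hardware with thousands of cores work on all rows at once.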

For enterprises relying on cloud-based solutions like Microsoft Azure or Google Cloud, the integration of GPUs and TPUs enables them to scale AI workloads dynamically, ensuring high performance without the need for costly, on-premises hardware. These specialized units not only improve the speed of computations but also enhance cost-efficiency by offering pay-as-you-go scalability.

This flexibility allows businesses to scale AI operations up or down based on their needs, making cloud-based AI infrastructure the ideal solution for today's data-driven enterprises.

Key Differences Between AI and IT Infrastructure

☑️ Processing Power: Traditional IT systems rely on CPUs, which are excellent for general-purpose computing. However, AI infrastructure uses GPUs and TPUs, which are designed for parallel processing, allowing AI systems to perform multiple operations simultaneously and at much higher speeds.

☑️ Scalability: Traditional IT setups often struggle with scaling efficiently when handling AI workloads. In contrast, AI infrastructure is typically cloud-based, offering the ability to scale up or down depending on the demands of AI applications.

☑️ Software and Frameworks: AI infrastructure includes specialized software and machine learning frameworks. Traditional IT environments often lack these advanced tools and capabilities.

☑️ Data Storage: AI workloads require fast, scalable storage systems capable of managing massive datasets. Traditional IT infrastructure often relies on slower, less flexible on-premise solutions, while AI infrastructure leverages cloud storage for speed, scalability, and flexibility.

Why Businesses Need AI Infrastructure

Investing in AI infrastructure is essential for companies looking to harness the full potential of artificial intelligence and big data. Traditional IT environments, primarily built around CPUs, struggle to meet the demands of AI-driven applications, especially those that involve large-scale machine learning and deep learning tasks. Microsoft highlights in its 2024 State of AI Infrastructure report that many businesses face significant roadblocks in AI projects because their existing systems aren’t designed to handle the complexity and scale of modern AI workloads.

With 95% of organizations planning to expand their AI usage in the coming years, according to Microsoft, it’s clear that the infrastructure supporting these initiatives will be critical for maintaining a competitive edge.

Key Components of AI Infrastructure

AI infrastructure is built on several core components that enable it to meet the demands of artificial intelligence tasks. These components support everything from data-heavy applications to advanced machine learning models.

1. Compute Power: The Engine of AI

AI relies on Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) to handle the heavy computational load. GPUs excel at parallel processing, enabling faster execution of tasks like image recognition, while TPUs are optimized for deep learning tasks, offering faster performance for training AI models.

2. Data Storage and Processing

AI models require large datasets, and cloud-based storage solutions such as Azure Blob or Google Cloud Storage provide the scalability to store and access this data. Data processing frameworks like Apache Spark ensure that raw data is cleaned and organized, preparing it for AI model training.
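As a rough illustration of what "cleaned and organized" means in practice, the sketch below applies three common cleaning steps to a handful of records using only the Python standard library. A framework like Apache Spark would run comparable logic across a cluster; this single-machine version does not use Spark's API, and the field names (`customer_id`, `amount`, `region`) and sample rows are hypothetical.

```python
def clean_records(raw_records):
    """Drop incomplete rows, normalize types, and remove duplicates."""
    seen_ids = set()
    cleaned = []
    for record in raw_records:
        customer_id = record.get("customer_id")
        amount = record.get("amount")
        # Step 1: skip rows that are missing required fields.
        if customer_id is None or amount in (None, ""):
            continue
        # Step 2: normalize types; skip rows whose amount is not numeric.
        try:
            amount_value = float(amount)
        except (TypeError, ValueError):
            continue
        # Step 3: keep only the first row seen for each customer.
        if customer_id in seen_ids:
            continue
        seen_ids.add(customer_id)
        region = (record.get("region") or "unknown").strip().lower()
        cleaned.append(
            {"customer_id": customer_id, "amount": amount_value, "region": region}
        )
    return cleaned


# Hypothetical raw data with typical quality problems.
raw = [
    {"customer_id": 1, "amount": "19.99", "region": " East "},
    {"customer_id": 2, "amount": None, "region": "west"},      # missing amount
    {"customer_id": 1, "amount": "19.99", "region": "east"},   # duplicate
    {"customer_id": 3, "amount": "n/a", "region": "North"},    # not numeric
    {"customer_id": 4, "amount": 5, "region": None},           # missing region
]

for row in clean_records(raw):
    print(row)
```

Only the rows for customers 1 and 4 survive, with amounts converted to numbers and regions normalized to lowercase.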

3. Machine Learning Frameworks

Frameworks like TensorFlow and Microsoft ML.NET provide the environment for building and deploying AI models. These frameworks allow developers to accelerate AI projects, supporting various machine learning tasks and optimizing the use of GPUs for faster model training.

4. MLOps Platforms

To streamline the AI lifecycle, MLOps platforms such as Azure Machine Learning automate tasks like data validation and model monitoring, making it easier for teams to manage AI projects from development to deployment.

These components work together to create an efficient and scalable AI infrastructure, empowering businesses to innovate and grow.
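The data validation and model monitoring tasks mentioned under MLOps platforms can be sketched as two small checks. This is a simplified illustration and not the Azure Machine Learning API: the function names, the field names, the value ranges, and the 0.05 tolerance are all assumptions made for this example.

```python
from statistics import mean


def validate_batch(rows, required_fields, ranges):
    """Return a list of human-readable problems found in a batch of rows."""
    problems = []
    for index, row in enumerate(rows):
        # Check 1: every required field must be present.
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {index}: missing {field}")
        # Check 2: numeric fields must fall inside their allowed range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append(
                    f"row {index}: {field}={value} outside [{low}, {high}]"
                )
    return problems


def accuracy_has_degraded(recent_accuracies, baseline_accuracy, tolerance=0.05):
    """Flag the model when average recent accuracy falls too far below baseline."""
    return mean(recent_accuracies) < baseline_accuracy - tolerance


# Hypothetical incoming data and monitoring history.
batch = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 48000},
    {"age": 210, "income": 61000},
]
print(validate_batch(batch, ["age", "income"], {"age": (0, 120)}))
print(accuracy_has_degraded([0.80, 0.82, 0.81], baseline_accuracy=0.92))
```

An MLOps platform typically runs checks of this kind automatically on every new batch and deployment, then alerts the team or triggers retraining when one fails.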

💡Why These Components Matter

Together, these components form a cohesive system that allows AI infrastructure to process vast datasets, run complex models, and scale according to business needs. By investing in these technologies, businesses can ensure that their AI applications not only run efficiently but also drive innovation and long-term growth.

The Role of Cloud in AI Infrastructure

In today's digital landscape, the cloud plays an essential role in supporting the infrastructure needed for AI applications. While traditional on-premises systems can struggle with the immense computational demands of AI, cloud-based AI infrastructure provides the scalability, flexibility, and performance necessary for modern AI tasks.

Why AI Needs Cloud Infrastructure

As businesses expand their use of AI, the computational power and data storage requirements grow exponentially. On-premises systems, while once the standard for IT infrastructure, are often costly and inefficient when it comes to handling AI workloads. They can become bottlenecks for AI innovation, requiring significant investments in hardware and maintenance. AI workloads can tie up these systems for days, making it difficult to scale AI projects effectively.

The cloud, by contrast, offers a much more flexible solution. According to IDC research, the lack of AI-specific infrastructure is one of the main reasons AI projects fail. The cloud eliminates many of the constraints of traditional IT systems by offering the power and scale AI demands without the cost and burden of on-prem infrastructure.

Cloud infrastructure is designed for massive parallel processing, which allows AI models to be trained faster and with greater accuracy. For instance, cloud-based GPUs and TPUs, which are engineered specifically for AI tasks, enable businesses to process huge datasets, train machine learning models, and deploy AI applications in a fraction of the time it would take on traditional systems.

Benefits of Cloud AI Infrastructure

1. Scalability

One of the key advantages of cloud AI infrastructure is its ability to scale up or down based on the needs of the business. If a company suddenly needs to process large amounts of data for a new AI project, the cloud allows them to easily increase computing power without investing in additional hardware. Once the project is complete, they can scale back down, ensuring they only pay for what they use.

Think of it as a "pay-as-you-go" model for computing power, where companies can dynamically adjust their resource needs without being tied to the constraints of physical infrastructure.
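The pay-as-you-go idea can be worked through with a small calculation. The sketch below sizes the number of workers to each hour's demand and compares the bill with keeping peak capacity running all day. Every number in it (jobs per worker, price per worker-hour, the demand curve, the worker limits) is a made-up assumption, not a real cloud price.

```python
import math


def workers_needed(queued_jobs, jobs_per_worker_per_hour, min_workers=1, max_workers=20):
    """Scale the worker count to demand, within fixed lower and upper limits."""
    needed = math.ceil(queued_jobs / jobs_per_worker_per_hour)
    return max(min_workers, min(max_workers, needed))


def pay_as_you_go_cost(hourly_demand, jobs_per_worker_per_hour, price_per_worker_hour):
    """Pay only for the workers actually running in each hour."""
    worker_hours = sum(
        workers_needed(jobs, jobs_per_worker_per_hour) for jobs in hourly_demand
    )
    return worker_hours * price_per_worker_hour


def fixed_capacity_cost(hourly_demand, jobs_per_worker_per_hour, price_per_worker_hour):
    """Keep enough workers for the busiest hour running the whole time."""
    peak = max(workers_needed(jobs, jobs_per_worker_per_hour) for jobs in hourly_demand)
    return peak * len(hourly_demand) * price_per_worker_hour


# Hypothetical demand: a quiet period with a two-hour spike.
demand = [10, 10, 250, 400, 30, 10]

print(pay_as_you_go_cost(demand, jobs_per_worker_per_hour=50, price_per_worker_hour=3.0))
print(fixed_capacity_cost(demand, jobs_per_worker_per_hour=50, price_per_worker_hour=3.0))
```

With these illustrative numbers, scaling with demand uses 17 worker-hours while fixed peak capacity uses 48, so the gap between the two bills grows with how spiky the workload is.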

2. Performance

Cloud platforms like Azure AI infrastructure are optimized for high-performance computing. They provide a seamless environment that integrates AI services, machine learning tools, and open-source frameworks. This ensures that AI applications run efficiently and can be developed and deployed at speed.

3. Cost Efficiency

With cloud AI infrastructure, businesses can save significantly on costs. There is no need for large upfront investments in hardware, and companies avoid the ongoing maintenance costs associated with traditional IT systems. Companies that have moved their AI workloads to Azure’s full-stack AI solution have reported substantial savings, reducing infrastructure costs by 30% or more.

4. Flexibility

Cloud-based AI infrastructure offers unparalleled flexibility. Businesses can choose from a variety of tools and resources that best fit their needs, such as machine learning frameworks like TensorFlow or PyTorch, or platforms like Azure Machine Learning. This flexibility allows companies to experiment and innovate without being locked into specific hardware or software solutions.

5. Security and Compliance

One of the biggest concerns for businesses adopting AI is the security of their data. Cloud providers like Azure offer multi-layered security controls designed to protect sensitive information while ensuring compliance with data privacy regulations. Whether it's encrypting data or safeguarding virtual networks, the cloud offers robust security features that traditional IT systems struggle to match.

How Cloud AI Infrastructure Supports Generative AI

A growing area of AI innovation is generative AI, which includes applications like ChatGPT that create content such as text, images, or even video. Cloud infrastructure is critical here because generative AI requires an immense amount of processing power and storage to manage its data and train its models.

Cloud platforms, with their scalability and computing capabilities, provide the perfect environment for developing and deploying generative AI solutions. For instance, Azure AI services support enterprises in building generative AI applications, enabling them to innovate faster while keeping costs in check.

💡 Why Cloud Matters for AI Infrastructure

In summary, cloud infrastructure is the key to unlocking the full potential of AI. It provides businesses with the scalability, flexibility, and performance needed to keep up with the rapidly growing demands of AI workloads. By moving to the cloud, companies can drive innovation, reduce costs, and ensure their AI projects have the infrastructure they need to succeed.

AI Infrastructure in Action: Real-World Applications

While the concept of AI infrastructure may seem abstract, its impact is very tangible. Businesses across industries are leveraging AI infrastructure to streamline operations, enhance customer experiences, and drive innovation. Let’s explore some real-world applications that demonstrate the power of AI infrastructure.

🚑 Healthcare: Improving Patient Care with AI

AI infrastructure is transforming healthcare by enabling faster, more accurate diagnoses and enhancing patient care. For example, Nuance, a healthcare technology company, uses Azure AI infrastructure to power its Dragon Ambient eXperience (DAX) solution, which automates medical documentation. By processing and analyzing vast amounts of patient data in real time, the solution allows doctors to spend more time with patients while ensuring compliance and privacy.

In this case, AI infrastructure supports massive data handling, model training, and deployment, improving healthcare outcomes by providing medical professionals with the tools they need to make informed decisions quickly.

👗Fashion: Accelerating Design with AI

In the fashion industry, Fashable uses AI to revolutionize the design process. Leveraging Azure’s full-stack AI infrastructure, Fashable has reduced the time it takes to create fashion designs from months to mere minutes. This kind of AI infrastructure allows the company to scale its operations while maintaining low costs, proving that even startups can harness the power of AI with the right tools.

By integrating machine learning and cloud-based infrastructure, Fashable can generate new designs rapidly, helping designers bring innovative ideas to market faster.

🚗Autonomous Vehicles: Scaling AI for Innovation

In the autonomous vehicle space, Wayve relies on Azure Machine Learning and AI infrastructure to develop the next generation of self-driving cars. The company uses AI models to process real-time data and make driving decisions, and the scale provided by Azure allows Wayve to train these models 90% faster than with traditional infrastructure.

For businesses in high-tech industries, having scalable AI infrastructure is crucial to support the enormous computational demands of deep learning models and innovation-driven tasks.

The Cost of AI Infrastructure

While building AI infrastructure may seem costly, it is a necessary investment for businesses that want to stay competitive. The good news is that with cloud-based solutions, businesses can reduce upfront costs and only pay for the resources they use.

✅ Cloud-Based AI: A Flexible Investment

One of the primary advantages of cloud-based AI infrastructure is its pay-as-you-go model. Instead of purchasing expensive hardware and managing it in-house, businesses can leverage the scalability of cloud providers like Azure, AWS, or Google Cloud to increase or decrease computing power as needed. This flexibility allows companies to avoid over-investing in resources they won’t always need while ensuring they can scale up when demand rises.

Businesses have reported significant cost savings, up to 30% annually, after moving their AI workloads to Azure’s full-stack AI platform. This reduction in cost comes from better resource utilization, less maintenance, and the ability to scale services efficiently.

✅ On-Premises AI Infrastructure: When It Makes Sense

While cloud AI infrastructure is often more flexible and cost-effective, there are instances where on-premises infrastructure may still be a better fit, particularly for industries with stringent security and compliance requirements, such as finance and healthcare. In these cases, investing in on-premises infrastructure can offer more control over data and performance, though it comes at a higher cost and less flexibility compared to the cloud.
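Security and compliance aside, the cloud versus on-premises decision often comes down to how heavily the hardware will be used. The sketch below finds the month at which cumulative cloud spending catches up with an on-premises purchase. All figures (hardware price, operating cost, GPU-hour price, usage levels) are hypothetical and chosen only to show the shape of the calculation.

```python
def cumulative_cloud_cost(months, gpu_hours_per_month, price_per_gpu_hour):
    """Total cloud spend after a number of months of steady usage."""
    return months * gpu_hours_per_month * price_per_gpu_hour


def cumulative_on_prem_cost(months, upfront_hardware_cost, monthly_operating_cost):
    """Upfront purchase plus ongoing power, space, and maintenance."""
    return upfront_hardware_cost + months * monthly_operating_cost


def break_even_month(gpu_hours_per_month, price_per_gpu_hour,
                     upfront_hardware_cost, monthly_operating_cost, max_months=120):
    """First month in which cloud spend reaches on-prem spend, or None."""
    for month in range(1, max_months + 1):
        cloud = cumulative_cloud_cost(month, gpu_hours_per_month, price_per_gpu_hour)
        on_prem = cumulative_on_prem_cost(
            month, upfront_hardware_cost, monthly_operating_cost
        )
        if cloud >= on_prem:
            return month
    return None


# Hypothetical heavy usage: 2,000 GPU-hours every month.
print(break_even_month(2000, 4.0, 120_000, 2_000))
# Hypothetical light usage: 300 GPU-hours every month.
print(break_even_month(300, 4.0, 120_000, 2_000))
```

Under these assumptions, sustained heavy usage reaches break-even at month 20, while light usage never does within the ten-year window. That matches the general pattern: steady, high utilization favors owning hardware, and variable or modest demand favors the cloud.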

✅ ROI Considerations

Businesses considering investing in AI infrastructure should also factor in the long-term return on investment (ROI). While the initial cost of transitioning to AI infrastructure might seem high, the benefits—such as improved decision-making, faster time-to-market, and enhanced operational efficiency—can far outweigh the costs.

By leveraging AI cloud infrastructure, businesses not only reduce their operational expenses but also create opportunities for growth through AI-driven innovations.
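A simple way to frame the ROI question is to compare total benefit with total cost over a planning horizon. The sketch below uses the standard ROI formula and a basic payback period; the cost and benefit figures are hypothetical, and a real assessment would also account for factors such as discounting and risk.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


def payback_period_years(total_cost, annual_benefit):
    """Years of steady benefit needed to recover the initial cost."""
    return total_cost / annual_benefit


# Hypothetical project: 200,000 invested, 90,000 of benefit per year for 3 years.
total_cost = 200_000
annual_benefit = 90_000
years = 3

print(f"ROI over {years} years: {roi(annual_benefit * years, total_cost):.0%}")
print(f"Payback period: {payback_period_years(total_cost, annual_benefit):.1f} years")
```

In this example the project returns 35% over three years and pays for itself a little after the second year. Changing the inputs shows how sensitive the result is to the benefit estimate.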

Ready to unlock the full potential of AI for your business?

Contact ProServeIT today for expert guidance on building scalable, cost-effective AI infrastructure tailored to your specific needs. Let us help you drive innovation and efficiency while maximizing your ROI with the right AI strategy.

Get in touch with ProServeIT to start your AI journey!

Future of AI Infrastructure

The future of AI infrastructure is likely to be shaped by a range of trends and technologies, including:

☑️ Cloud Computing: Cloud computing is likely to play an increasingly important role in AI infrastructure, providing scalable and flexible resources for AI workloads. Cloud platforms offer the computational power and storage needed to support large-scale AI applications.

☑️ Edge Computing: Edge computing is likely to become more important for AI infrastructure, providing real-time processing and analysis of data at the edge of the network. This can reduce latency and improve the performance of AI applications that require real-time decision-making.

☑️ Quantum Computing: Quantum computing is likely to have a significant impact on AI infrastructure, providing new capabilities for complex computations and simulations. Quantum computers may eventually solve certain problems that are intractable for classical computers, opening up new possibilities for AI applications.

☑️ Autonomous Systems: Autonomous systems are likely to become more prevalent in AI infrastructure, providing real-time monitoring and maintenance of AI systems. These systems can help ensure that AI infrastructure operates efficiently and reliably.

☑️ Explainable AI: Explainable AI is likely to become more important for AI infrastructure, providing transparency and accountability for AI decision-making. This can help build trust in AI applications and ensure that they are used responsibly.

By staying ahead of these trends and technologies, organizations can build a future-proof AI infrastructure that supports their AI initiatives and drives innovation.

Conclusion: AI Infrastructure as a Competitive Advantage

As AI continues to shape the future of business, having the right infrastructure in place is no longer optional—it’s essential. AI infrastructure provides the computational power, scalability, and flexibility needed to drive innovations in every sector, from healthcare to finance to manufacturing. By investing in purpose-built AI infrastructure, businesses can position themselves to lead in their industries, whether they’re developing cutting-edge products, optimizing operations, or improving customer experiences.

Companies that embrace AI infrastructure will be able to move faster, innovate more effectively, and deliver better results.

For decision-makers, now is the time to assess your current systems, explore cloud-based AI infrastructure options, and position your company for long-term success in the AI-powered future.

Are you ready to unlock the full potential of AI for your business? Reach out today to learn more about building a robust AI infrastructure that can scale with your business and deliver real results.

October 07, 2024
